Hi all,

I’ve seen a lot of use of the .local directory in SWAN for user installations, mostly through `pip install --user`. Indeed, it is mentioned in the SWAN help at https://github.com/swan-cern/help/blob/master/advanced/install_packages.md.

Because the .local directory is automatically added to the Python path of every subsequent Python execution (not just the one you did the installation with), user installations made this way have a global effect for that user. This may not be obvious, and it can easily lead to confusion and a lack of reproducibility: a notebook can work one day but not the next, because another notebook modified the environment.
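
To check whether this applies to a given kernel, the standard-library `site` module reports where `pip install --user` puts packages and whether the running interpreter picks them up. The helper name `describe_user_site` is hypothetical; this is a minimal sketch, and the values it reports depend entirely on the environment, so no output is shown.

```python
import site
import sys


def describe_user_site() -> dict:
    """Report where `pip install --user` installs go and whether this interpreter sees them."""
    user_site = site.getusersitepackages()
    return {
        # e.g. ~/.local/lib/pythonX.Y/site-packages on Linux
        "user_site": user_site,
        # False under `python -s`, with PYTHONNOUSERSITE set, or inside most venvs
        "enabled": bool(site.ENABLE_USER_SITE),
        # True when the directory existed at startup and was added to sys.path
        "on_sys_path": user_site in sys.path,
    }


if __name__ == "__main__":
    for key, value in describe_user_site().items():
        print(f"{key}: {value}")
```

If `on_sys_path` is true, anything installed there is visible to every notebook using this interpreter, which is exactly the global effect described above.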

Python provides tools for creating virtual environments (venvs) to avoid this global installation problem, but because of the way Jupyter works, it is hard (though by no means impossible) for SWAN to pick up a user-installed Jupyter kernel from a venv. One solution is to create a venv and then add the venv’s site-packages directory to the running kernel’s Python path.
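
Adding an existing venv’s site-packages to the running kernel could look roughly like the sketch below. It assumes the venv was created with the same Python version as the kernel, so the kernel’s own install scheme describes the venv’s layout; the function names are hypothetical. Moving the directory to the front of `sys.path` is a design choice made here so that the venv’s packages take precedence over same-named ones in ~/.local.

```python
import os
import site
import sys
import sysconfig
from pathlib import Path


def venv_site_packages(venv_dir: str) -> Path:
    """Return the pure-Python site-packages directory of the venv at venv_dir.

    Assumes the venv was created with the same Python version as the running
    kernel, so this interpreter's install scheme matches the venv's layout.
    """
    base = os.path.abspath(os.path.expanduser(venv_dir))
    # The "venv" scheme exists from Python 3.11; older versions fall back to
    # the default POSIX/Windows prefix layouts, which match what venv creates.
    if "venv" in sysconfig.get_scheme_names():
        scheme = "venv"
    else:
        scheme = "nt" if os.name == "nt" else "posix_prefix"
    return Path(sysconfig.get_path("purelib", scheme, vars={"base": base, "platbase": base}))


def add_venv_to_kernel(venv_dir: str) -> str:
    """Make packages installed in the venv importable from this running process."""
    site_packages = venv_site_packages(venv_dir)
    if not site_packages.is_dir():
        raise FileNotFoundError(f"no site-packages directory at {site_packages}")
    entry = os.path.abspath(site_packages)
    # addsitedir appends the directory to sys.path and processes any .pth files in it.
    site.addsitedir(entry)
    # Move it to the front so the venv's packages win over same-named ones elsewhere.
    if entry in sys.path:
        sys.path.remove(entry)
    sys.path.insert(0, entry)
    return entry
```

Calling `add_venv_to_kernel("~/python/environments/my-venv")` in a notebook cell would then make that venv’s packages importable for the rest of the session, and only for that session.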

With this in mind, the following steps are all you need to create an isolated environment and install whichever packages you need (without that pesky global effect!):

- create a venv (subprocess) in a particular location (I chose `$HOME/python/environments`)
- add the venv to `sys.path`
- pip install into the venv (subprocess)

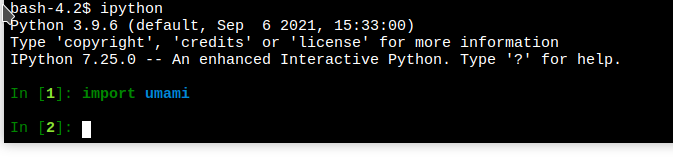
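
The three steps can be sketched in plain Python as follows. This is not the code from the screenshot above; it is a minimal illustration assuming a Linux/macOS or Windows venv layout, and the helper names (`create_venv`, `venv_purelib`, `pip_install`) are hypothetical. Rather than guessing the site-packages path, it asks the venv’s own interpreter for it.

```python
import importlib
import os
import subprocess
import sys
from pathlib import Path

# The location chosen in the post; any writable directory works.
ENV_ROOT = Path.home() / "python" / "environments"


def venv_python(venv_dir: Path) -> Path:
    """Interpreter inside a venv: bin/python on Linux/macOS, Scripts\\python.exe on Windows."""
    if os.name == "nt":
        return venv_dir / "Scripts" / "python.exe"
    return venv_dir / "bin" / "python"


def create_venv(name: str, root: Path = ENV_ROOT, with_pip: bool = True) -> Path:
    """Step 1: create the venv in a subprocess (skipped if it already exists)."""
    venv_dir = Path(root) / name
    if not venv_python(venv_dir).exists():
        cmd = [sys.executable, "-m", "venv", str(venv_dir)]
        if not with_pip:
            cmd.append("--without-pip")
        subprocess.run(cmd, check=True)
    return venv_dir


def venv_purelib(venv_dir: Path) -> str:
    """Ask the venv's own interpreter where its site-packages directory is (for step 2)."""
    result = subprocess.run(
        [str(venv_python(venv_dir)), "-c",
         "import sysconfig; print(sysconfig.get_path('purelib'))"],
        check=True, capture_output=True, text=True,
    )
    return result.stdout.strip()


def pip_install(venv_dir: Path, *packages: str) -> None:
    """Step 3: install into the venv using the venv's own pip, in a subprocess."""
    subprocess.run(
        [str(venv_python(venv_dir)), "-m", "pip", "install", *packages],
        check=True,
    )
    # Let the running kernel notice modules created since it started.
    importlib.invalidate_caches()
```

In a notebook this would be used as `env = create_venv("my-venv")`, then `sys.path.insert(0, venv_purelib(env))`, then `pip_install(env, "flake8")`. Because the path entry is added before installing, newly installed packages are importable without restarting the kernel.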

Of course, this isn’t quite as simple as `!pip install --user --upgrade <my-chosen-package>`, so I have wrapped it up into a simpler command at https://gitlab.cern.ch/pelson/swan-run-in-venv. It boils down to:

```
!curl -s https://gitlab.cern.ch/pelson/swan-run-in-venv/-/raw/master/run_in_venv.py -o .run_in_venv
%run .run_in_venv <my-venv> -m pip install <my-chosen-package>
```
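
The choice of `%run` over `!python` matters: `%run` executes the script inside the kernel’s own process, with the remaining arguments placed in `sys.argv`, so any `sys.path` change it makes is still in effect for later cells. A `!python ...` shell command runs in a separate process, and its changes vanish when it exits. The sketch below illustrates that distinction with the standard-library `runpy` module; it is not how IPython implements `%run`, it does not reproduce `run_in_venv.py`, and the helper names are hypothetical.

```python
import runpy
import subprocess
import sys
from contextlib import contextmanager


@contextmanager
def temporary_argv(argv):
    """Temporarily replace sys.argv, similar to how %run passes script arguments."""
    saved = sys.argv
    sys.argv = list(argv)
    try:
        yield
    finally:
        sys.argv = saved


def run_in_process(script: str, *args: str) -> None:
    """Execute a script in this interpreter; its sys.path edits persist afterwards."""
    with temporary_argv([script, *args]):
        runpy.run_path(script, run_name="__main__")


def run_in_subprocess(script: str, *args: str) -> None:
    """Execute a script in a child interpreter; its sys.path edits are lost on exit."""
    subprocess.run([sys.executable, script, *args], check=True)
```

A script containing `import sys; sys.path.append(sys.argv[1])` leaves the caller’s `sys.path` unchanged when run with `run_in_subprocess`, but extends it when run with `run_in_process`.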

In the future, it wouldn’t be hard to make this even more concise, for example `%venv_install <my-venv> flake8`, if SWAN pre-installed such a magic.
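
One possible shape for such a magic is sketched below: it simply rewrites its arguments into the `%run .run_in_venv ...` line shown earlier. It assumes `.run_in_venv` has already been downloaded into the working directory; `%venv_install`, `build_run_line`, `venv_install` and `register` are hypothetical names, while `run_line_magic` and `register_magic_function` are existing IPython `InteractiveShell` methods.

```python
import shlex


def build_run_line(line: str, script: str = ".run_in_venv") -> str:
    """Translate '<venv-name> <package> ...' into the equivalent %run argument line."""
    parts = shlex.split(line)
    if len(parts) < 2:
        raise ValueError("usage: %venv_install <venv-name> <package> [<package> ...]")
    name, packages = parts[0], parts[1:]
    args = [script, name, "-m", "pip", "install", *packages]
    return " ".join(shlex.quote(arg) for arg in args)


def venv_install(line: str) -> None:
    """Line-magic body: delegate to the downloaded run_in_venv script via %run."""
    from IPython import get_ipython  # only meaningful inside IPython/Jupyter

    get_ipython().run_line_magic("run", build_run_line(line))


def register() -> bool:
    """Register %venv_install in the current IPython session, if there is one."""
    try:
        from IPython import get_ipython
    except ImportError:
        return False
    shell = get_ipython()
    if shell is None:
        return False
    shell.register_magic_function(venv_install, magic_kind="line", magic_name="venv_install")
    return True
```

After `register()` returns `True` in a notebook, `%venv_install my-venv flake8` would behave like `%run .run_in_venv my-venv -m pip install flake8`.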

I’m wondering whether there is appetite for encouraging this approach rather than using the global .local directory, as the current recommendation does. Of course, one major implication is that every notebook with non-standard dependencies would need this command in its first executed code cell, since we would no longer be modifying the user’s installed packages globally.

Cheers,

Phil