Dear SWAN users,

We noticed interest on productionizing Spark notebooks for analytics pipelines, versioned with git.

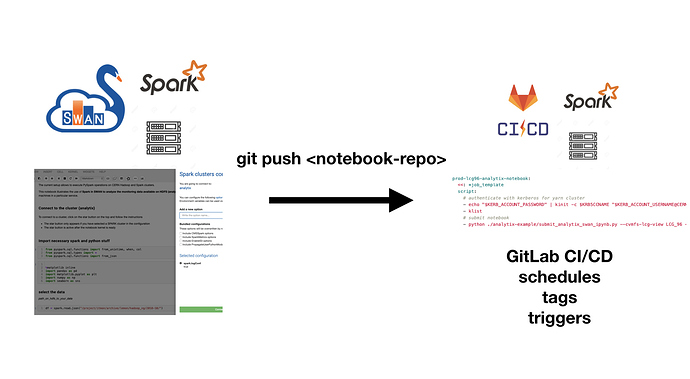

The proposed pipeline will consist of prototyping a Spark job in SWAN in interactive mode, committing to git (e.g. GitLab) repository shared across many team members, and designing Gitlab CI/CD pipelines that would submit and manage your Spark Notebook jobs in a batch mode.

For our internal purposes at IT-DB-SAS, we daily schedule set of Spark Notebooks to check LCG software, cluster reliability and Hadoop client configuration compatibility with a set of notebooks. The example repository can be found here https://gitlab.cern.ch/db/swan-spark-notebooks/tree/v0.1/ (you need to be logged in to CERN GitLab).

In the above example, we developed Python script that converts SWAN .ipynb file into set of Spark configurations and .py code.

You can use these examples as a reference implementation for your own pipelines:

- submit_analytix_swan_ipynb.py

- submit_nxcals_swan_ipynb.py

- submit_k8s_swan_ipynb.py

- submit_k8s_dockerfile_ipynb.py

Next step is to create .gitlab-ci.yml that would setup your pipelines. The example analytix submission would be defined as follows:

> .gitlab-ci.yml

prod-lcg96-analytix-notebook:

tags:

- cvmfs

image:

name: ${CI_REGISTRY_IMAGE}:latest

entrypoint: ["/bin/sh", "-c"]

script:

# authenticate with kerberos for yarn cluster

- echo "$KERB_ACCOUNT_PASSWORD" | kinit -c $KRB5CCNAME "$KERB_ACCOUNT_USERNAME@CERN.CH"

- klist

# submit notebook

- python ./analytix-example/submit_analytix_swan_ipynb.py --cvmfs-lcg-view LCG_96 --cvmfs-lcg-platform x86_64-centos7-gcc8-opt --appid prod-lcg96-hostmetrics-notebook-$RANDOM --ipynb ./analytix-example/analytix-hostmetrics-example.ipynb --hadoop-confext /cvmfs/sft.cern.ch/lcg/etc/hadoop-confext

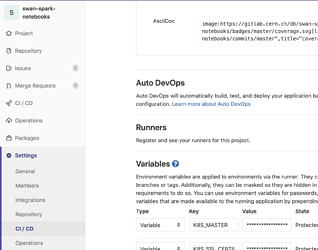

You can configure some advanced specifics of your pipelines in Gitlab CI/CD interface (please check https://docs.gitlab.com/ee/ci/variables/#custom-environment-variables for more info). One example are some environment variables used to authenticate the submitting user. In our case, env KERB_ACCOUNT_USERNAME represents the submitting user as a service account hconfig@CERN.CH and service account password.

The example for a full pipeline (that includes running many notebooks, checking the return code, and triggering alert to Mattermost channel) can be found here https://gitlab.cern.ch/db/swan-spark-notebooks/tree/v0.1/.gitlab-ci.yml

Using Git and GitLab pipelines could be very interesting solution to productionize your Spark applications that are fully controlled and orchestrated by git.

Please contact swan-admins@cern.ch for consulting regarding setting up such a production pipelines